THE BIGGEST PROBLEM IN SOCIAL SCIENCE

Eyewitnesses; assault; experiments; academia; economics; police interviews; stress

Want to win a Nobel Prize? Simply solve the problem in this newsletter. Sadly, there’s no Nobel Prize in Psychology, but I bet I could convince them to give you one in Economics.

Let’s imagine a police officer faced with this situation: He’s investigating an assault that took place in the dockyards a few nights ago. He has an eyewitness but doesn’t know quite how far to trust her. She says she saw the entire incident. She describes the attacker in a certain amount of detail – approximate height and build, hair colour etc. This witness claims he was wearing a yellow top. The police officer has a suspect who answers the description. The suspect even owns a yellow top. Nevertheless, the officer has his doubts. It was dark at the time and the witness cannot have seen well. It was 3am. She was tired and slightly drunk. No surprise she’d been drinking: she recently got evicted and went through an unpleasant break-up. Because she suffers from chronic stress, she has been signed off from work with anxiety.

The police officer asks a psychologist, ‘How does stress affect eyewitness performance?’ If he’s expecting a straightforward answer, he has clearly not met too many psychologists. Here is something the psychologist will not say: ‘Stress affects eyewitness behaviour like thus-and-so. Your witness has a 75% probability of remembering height correctly; an 82% probability on the build; but as for the colour of the attacker’s top, forget about it’.

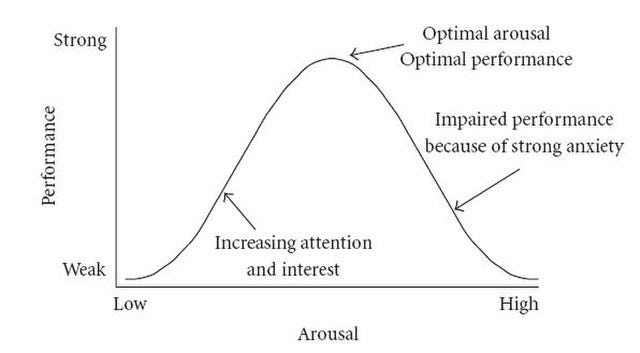

Our officer may feel aggrieved, especially when the psychologist goes on to refer to the Yerkes-Dodson Curve, which tells us that the relationship between stress and task performance seems to follow an inverted-U shape, such that performance is highest in situations of moderate arousal but tends to tail off when arousal is too low (those times when you’re just too bored to care) or too high (when you’re stressed out of your head). ‘Where on this Yerkes-Dodson curve was our eyewitness?’ the police officer asks. ‘How would I know?’ replies the psychologist.

Perhaps the police officer will begin to suspect he has wasted his time speaking to a psychologist in the first place.

But now consider the psychologist’s own dilemma. She may have carried out lots of research on stress and eye-witness behaviour. She may even be a renowned expert. But any given case presents unique problems of interpretation. The police officer wants guidance on a cause-and-effect relationship between stress and eyewitness accuracy. There is only one way to establish cause-and-effect relationships and that is to carry out a laboratory experiment. (If you’d like to know more about the reasons for this, and the mechanics of running an experiment in Psychology, please follow THIS LINK. I’ve laid it all out for you with immense care and attention!)

How could any psychologist possibly run an experiment to answer the officer’s question? It seems impossible. It’s difficult to imagine any ethics board in the world allowing a researcher to subject their participants to massive levels of stress like those they would encounter in real-life eye-witnessing situations. After all, we psychologists, like medical researchers, have a duty of care to the kind people who volunteer to help us with our research.

Know which two words in the last paragraph are the vital ones? - ‘Real life’. Real life is the concern here. Even if our psychologist could establish a cause-and-effect relationship that works reliably in the laboratory (they can’t), our police officer might wonder whether it will still hold in the docks at 3am with a drunken witness.

At this point, the psychologist might, theoretically, dig out some research into actual drunken eye-witnesses who happen to see dubious events occurring dockside in the wee small hours of the morning. She might look through the literature and try to establish consistencies. There won’t be much literature, for sure, but maybe she’ll find something usable: if not in the academic literature, maybe in the newspapers or, well, somewhere. This creates a new problem: the research will relate only to very specific examples of eye-witnessing. All the suspects in the newspaper reports will have been wearing different clothing. The lighting will surely have varied, as will the number of distractions in the environment, the violence of the crime, and so on. Any or all of these variables might have an effect. How would our psychologist know?

She has struck the rocks of ‘ecological validity’. This is a jargon phrase, of course. It refers to the difficulty of establishing the extent to which findings in the laboratory (or any other controlled environment) reflect real-life situations, of the kind that our police officer might be interested in. In order to establish causal relationships, a scientist needs to control the variables. But the more they control things, the less similar to real life the situation becomes. And the more the situation does reflect real life, the less control the psychologist has.

When it comes to assessing the value of a piece of research, both control and ecological validity are highly desirable. But the more we have of the first, the less we have of the second.

Enjoying this newsletter? Go on, click here to buy me a coffee. I’d like that.

The problem isn’t exclusive to Psychology. Many areas of social science suffer in the same way. Suppose an economist wants to know whether quantitative easing reduces the value of cash money. It would be easy enough to test this cause-and-effect relationship in the sterile confines of a laboratory. Our economist could easily design such an experiment. Participants have to buy articles from each other, say, using some kind of fake currency. The economist pumps extra fake money into this Lilliputian economy and measures whether the price of the articles changes as a consequence. Perhaps the economist discovers some fairly clear-cut results, indicating a cause-and-effect relationship. But would those results still apply in the messy real world outside the laboratory? Who knows?

No one needs to worry about such problems in, say, Chemistry. Ecological validity is virtually no concern at all. Atoms and molecules behave the same way in the laboratory as they do anywhere else.

Ecological validity is a big concern for anyone studying so-called ‘psycholegal issues’. Examples include eye-witness behaviour, police interview techniques, or jury decision-making. Researchers in these areas can hardly be expected to relax of an evening. They have far too much on their minds.

In the practical-minded spirit of this newsletter, let’s take a couple of real-world examples.

Once upon a time (1992), in a land far, far away (London), a band of magical characters called the UK’s Association of Chief Police Officers introduced something they called PEACE. PEACE spread through England, Wales, Canada and New Zealand (make up your own joke). It was a new approach to interviewing suspects: a response to a number of high-profile cases in which suboptimal police interviews had led to miscarriages of justice. We may look in more depth at PEACE in an upcoming newsletter, if you like.

PEACE, we might say, didn’t happen all by itself (does it ever?). It was accompanied by a training course for interviewers, which was intended to teach police officers valuable new skills. This is all very commendable, but of course the difficulty is in knowing just how well the training worked. Certainly, there are reasons to be optimistic. For one thing, we hear less about police brutality and ‘interrogations’ now than perhaps we did before.[i] Police interviews in the UK seem in many respects better and more effective than they were before PEACE came along. The key word there is ‘seem’.

How can we be sure? A lot of time has gone by since PEACE first appeared. The police officers who are using it now are not the same as the ones who were working before it was invented (they may even be their sons or daughters). Indeed, police culture in general, we might suspect, must have changed a lot in the last three decades or so. While some psychologists have certainly found improvements, we could only be really confident about their causes if we were to conduct a laboratory experiment. And how on earth would we do that?

Critics might find reasons for scepticism. In 2011, psychologists compared real-life suspect interviews carried out across six police forces by two groups of police officers. One group had taken a training course; the other had not. ‘Interviews’ the psychologists remark, ‘were generally of average standard [….] further improvement in training is needed’. In fact, the psychologists found that the only difference PEACE training made, once officers ventured out into the real world, was that the trained officers’ interviews took longer.[ii] That was it. The course in question was pretty short, though. It lasted only a week. A longer, three-week course seemed to lead to more durable improvements. Even so, the theorists behind PEACE must have been frustrated to learn that the more complex skills the trainees learnt had vanished within a year. Sometimes our abstractions struggle in the face of reality.

Of course, these are the sorts of discovery you can make only after rolling out an initiative in the real world. After all, no one can (or wants to) run a laboratory experiment that lasts a whole year. Certainly no organisation would be willing to fund it. So what can we be expected to learn?

Well, we’ll address that question in our next newsletter, Crime & Psychology fan! Make sure you are here when we start looking at the implications of ecological validity for our studies of jury decision-making and assault. Check back in next Wednesday: we wouldn’t want you to miss out!

You can link to Part 2 of this post here.

Yerkes-Dodson Curve courtesy of Wikimedia Commons.

[i] Milne, R & Bull, R: ‘Witness interviews & crime investigation’ in D Groome & M Eysenck (eds) An Introduction to Applied Cognitive Psychology, 2nd edition, Routledge, Abingdon, 2016, pp175-197

[ii] Clarke, C; Milne, R; & Bull, R: ‘Interviewing suspects of crime: The impact of PEACE training, supervision & the presence of a legal advisor’, Journal of Investigative Psychology & Offender Profiling, 8(2), 2011, pp149-162. Quote from the abstract.

Really interesting, and I would like to know more about PEACE and how it spread.

In education, professional development (aka training) suffers from similar issues. The best kind is enduring and has multiple points of contact over a longish period of time. It seems that training for PEACE would require a similar set of interventions... with trainers returning periodically and continuous evaluation/reflection. Very hard to do without lots of resources and buy-in... and all of this is assuming the thinking behind PEACE is sound.